Introduction:

What is Deep Learning?

Deep learning is a subset of machine learning, which is itself a branch of artificial intelligence (AI). Rather than relying on people to write task-specific programs, deep learning takes unstructured data and trains itself to make progressively more accurate decisions based on the data provided. Deep learning applications learn to solve narrow tasks without being explicitly programmed to do so.

Deep learning, then, isn’t just some far-off technology that will help people in the future. It’s solving problems, both mundane and significant, right now. Facial recognition unlocks phones and identifies friends in photographs, and recommendation systems suggest videos to watch, music to play, or products to buy on e-commerce sites based on your browsing history.

It also supports medical imaging (for example, in diagnosing diseases such as cancer), email spam filters, and credit card fraud detection.

Deep learning applications have a keen eye for tracking issues: they can identify irregularities and differences within large amounts of data. This is something people do inherently well, whereas conventional computer systems cannot really recognize what is unusual or understand what makes one item different from another.

People, however, tire easily when making decisions, and computers don’t. That is why deep learning, when applied to the right kinds of machine vision tasks, can benefit factories and manufacturing companies in ways that other emerging technologies may take years to deliver.

Deep learning technology is being used to predict patterns and make critical business decisions. The same technology is now moving into advanced manufacturing practices for quality inspection and other judgment-based uses, such as defect detection or final assembly verification.

Much of deep learning depends on the workings of neural networks. When a neural network is being trained, training data is fed into the bottom layer (the input layer) and passes through the succeeding computational layers, being multiplied and added together in complex ways, until it finally arrives, radically transformed, at the output layer.
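To make the layer-by-layer picture concrete, here is a minimal sketch in Python with NumPy of a forward pass through a small fully connected network. The layer sizes, the ReLU activation, and the random weights are illustrative assumptions, not taken from any particular system, and training (adjusting the weights from data) is left out.

```python
import numpy as np

def relu(x):
    # Nonlinearity applied between layers
    return np.maximum(0.0, x)

def forward(x, layers):
    """Propagate input x through a list of (W, b) layers.

    Hidden layers apply ReLU; the final (output) layer is left linear.
    """
    h = x
    for i, (W, b) in enumerate(layers):
        h = h @ W + b  # weighted sum: multiply by weights, add bias
        if i < len(layers) - 1:
            h = relu(h)
    return h

rng = np.random.default_rng(0)
# Hypothetical sizes: 4 input features, two hidden layers of 8 units, 2 outputs
sizes = [4, 8, 8, 2]
layers = [(rng.normal(scale=0.5, size=(m, n)), np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]

x = rng.normal(size=(3, 4))  # a batch of 3 example inputs
y = forward(x, layers)
print(y.shape)  # (3, 2)
```

Each `(W, b)` pair plays the role of one computational layer; stacking more pairs makes the network deeper.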

1. Bringing Deep Learning into the Mix

The movement of raw materials, goods, and parts is at the core of any manufacturing system. After the revolution in computing and information technology, it became clear that such physical movement can be optimally efficient only when it is controlled precisely, in coordination with many other similar movements, and governed by an information processing engine. In this way, an innovative combination of hardware and software has guided the “old industries” into the era of smart manufacturing.

Today, however, manufacturing industries worldwide are facing a new problem originating from those very information processing systems: the dual (and related) problem of the data deluge and the information explosion.

As the cost and operational complexity of computing and storage decreased at an exponential pace (Moore’s law), the information generated by workers, machines, controllers, factories, warehouses, and logistics equipment exploded in size and complexity to the point that it overwhelmed traditional manufacturing organizations.

They have not been alone, however. Even data-savvy software and IT organizations have had to face the same problem over the last decade or so. Google’s blogs and publications have acknowledged that the complexity of its software projects was becoming unmanageable.

The solution to this problem?

Innovative ideas in the field of deep learning have seen AI come to the rescue of many software organizations, keeping them from drowning in the data deluge and helping them make sense of the exabytes of data they have to process every day.

Although not at the same scale, manufacturing units around the globe are also starting to apply cutting-edge advances in these fields to support and improve their operations and to keep delivering the highest value to their customers and investors. Let’s explore a few interesting examples and practical cases.

2. Potential Deep Learning Applications in Manufacturing

Digital transformation and strategic modelling techniques have been applied in the manufacturing industry for quite a while. Over the years, the industry has suffered from many inefficiencies due to a lack of knowledge or an inappropriate use of resources.

As inefficiencies plagued global manufacturing in the 1960s and 1970s, almost every large-scale organization streamlined its operations and adopted lean manufacturing methods such as the one pioneered by Toyota. Such methods relied on continuous measurement and statistical modelling of a large number of process variables and product features.

Eventually, as the measurement and storage of such data became digitized, computers were introduced to build those predictive models. This was the forerunner of modern digital analytics.

As the data deluge continues, conventional statistical modelling cannot keep up with such a high-dimensional, unstructured data feed. This is where deep learning shines: it is inherently capable of handling highly nonlinear data patterns, and it can discover features that are very hard for analysts or data inspectors to spot manually.

3. Quality Control with Machine Learning and Deep Learning

AI in general, and deep learning in particular, can significantly improve quality control tasks on a large assembly line.

Traditionally, machines have only been effective at spotting quality problems through high-level metrics, such as the weight or length of a product. Without spending a fortune on very sophisticated computer vision systems, it was impossible to detect subtle visual clues of quality problems while parts whizz by on a high-speed assembly line.

4. Predictive Maintenance with Deep Learning

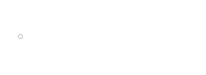

Deep learning models have already proven highly effective in economics and financial modelling, where they deal with time-series data.

Similarly, in predictive maintenance, data is collected over time to monitor the health of an asset, with the goal of finding patterns that predict failures. Deep learning can therefore be of significant help in the predictive maintenance of complex machinery and connected systems.

Deciding when to perform maintenance on equipment is an extremely difficult task with high financial and regulatory stakes. Each time a machine is taken offline for maintenance, the result is reduced production or even factory downtime.

Frequent repairs translate into clear losses, yet infrequent maintenance can lead to far more expensive breakdowns and catastrophic industrial accidents.

This is why the automated feature engineering of neural networks is so important. Traditional machine learning algorithms for predictive maintenance depend on limited, domain-specific expertise to hand-craft features that identify machine health problems, whereas a neural network can infer those features automatically, given sufficiently good training data.
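As a rough illustration of the hand-crafted approach, the sketch below slices a vibration signal into overlapping windows and computes a few classic health indicators per window (RMS, peak-to-peak, and crest factor). The signal, the window sizes, and the feature choices are all hypothetical. A neural network would instead take the raw windows as input and learn its own features during training.

```python
import numpy as np

def sliding_windows(signal, width, step):
    """Split a 1-D sensor signal into overlapping windows of `width` samples."""
    starts = range(0, len(signal) - width + 1, step)
    return np.stack([signal[s:s + width] for s in starts])

def handcrafted_features(windows):
    """Hand-designed health indicators, one row per window."""
    rms = np.sqrt(np.mean(windows ** 2, axis=1))        # overall vibration energy
    peak_to_peak = windows.max(axis=1) - windows.min(axis=1)
    crest = np.abs(windows).max(axis=1) / rms            # spikiness relative to energy
    return np.column_stack([rms, peak_to_peak, crest])

# Hypothetical vibration trace: a sine wave whose amplitude grows as wear sets in
t = np.linspace(0, 10, 1000)
signal = (1 + 0.2 * t) * np.sin(2 * np.pi * 5 * t)

windows = sliding_windows(signal, width=100, step=50)
features = handcrafted_features(windows)
print(windows.shape, features.shape)  # (19, 100) (19, 3)
```

In this toy trace the RMS column rises from window to window, which is the kind of trend a maintenance engineer would watch. The catch is that someone has to know in advance which indicators matter for each machine.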

5. Process Monitoring and Anomaly Detection

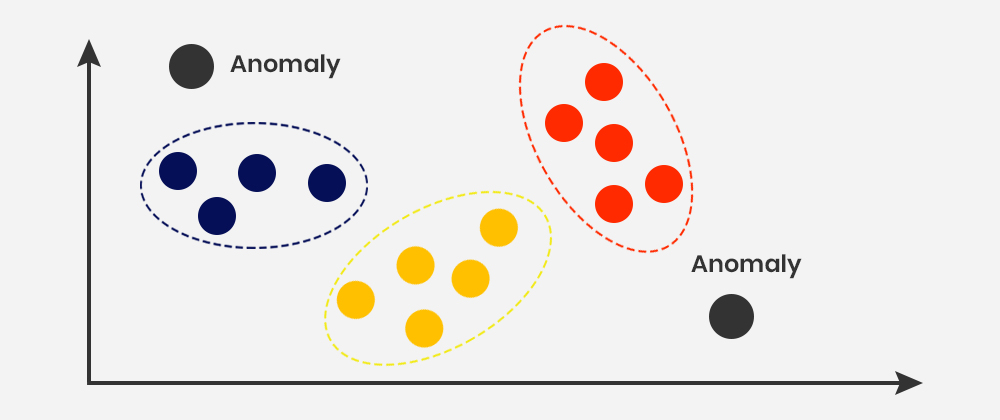

Process monitoring and anomaly detection are essential to any continuous quality improvement effort, and all the major manufacturing organizations use them extensively. Traditional approaches such as SPC (Statistical Process Control) charts are derived from simple (and sometimes incorrect) assumptions about the statistical distribution of the process variables.
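For reference, a basic Shewhart-style control chart sets its limits at the mean plus or minus three standard deviations of in-control data. The minimal sketch below assumes normally distributed, independent measurements, which is exactly the kind of simplifying assumption mentioned above; the data values are made up for illustration.

```python
import numpy as np

def control_limits(baseline):
    """Shewhart-style limits: mean +/- 3 standard deviations of in-control data.

    Assumes the process variable is roughly normal and independent over time.
    """
    mu = baseline.mean()
    sigma = baseline.std(ddof=1)
    return mu - 3 * sigma, mu + 3 * sigma

def out_of_control(samples, lcl, ucl):
    """Indices of samples falling outside the control limits."""
    return np.flatnonzero((samples < lcl) | (samples > ucl))

rng = np.random.default_rng(42)
baseline = rng.normal(loc=50.0, scale=0.5, size=200)  # hypothetical in-control readings
lcl, ucl = control_limits(baseline)

new = np.array([50.1, 49.8, 50.3, 53.0, 49.9])  # 53.0 is a deliberate shift
print(out_of_control(new, lcl, ucl))  # [3]
```

This works well for a single, well-behaved variable. It says nothing about interactions between many correlated sensors, which is where the limitations described next begin to bite.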

As the number of mutually interacting variables grows rapidly, and an ever-expanding array of sensors captures both static and time-varying data about these variables, the traditional approaches do not scale with high accuracy or reliability.

These are cases where deep learning models can help in a rather unexpected way. To detect anomalies or departures from the norm, dimensionality reduction techniques such as PCA (Principal Component Analysis), borrowed from traditional statistical signal processing, are often used. Alternatively, one can use standard or variational autoencoders: deep neural networks whose layers first progressively compress the input and then progressively expand it back (convolutional filters are a common choice for these layers). Trained only on normal operating data, such a model reconstructs normal inputs well, so an unusually large reconstruction error signals a likely anomaly.
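The PCA route can be sketched in a few lines: fit principal components on normal operating data, then score new readings by how poorly they are reconstructed from the low-dimensional projection. PCA behaves like a linear autoencoder, so an autoencoder-based detector follows the same encode, decode, and compare logic, with a nonlinear network in place of the projection. The sensor data, the choice of two components, and the 99th-percentile threshold are all assumptions for illustration.

```python
import numpy as np

def fit_pca(X, k):
    """Return the mean and top-k principal directions of training data X."""
    mu = X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by explained variance
    _, _, Vt = np.linalg.svd(X - mu, full_matrices=False)
    return mu, Vt[:k]

def reconstruction_error(X, mu, components):
    """Squared error after projecting onto the k-dim subspace and back."""
    Z = (X - mu) @ components.T   # encode: compress to k dimensions
    X_hat = Z @ components + mu   # decode: map back to the sensor space
    return np.sum((X - X_hat) ** 2, axis=1)

rng = np.random.default_rng(0)
# Hypothetical normal operation: 10 sensors driven by 2 hidden factors plus noise
mixing = rng.normal(size=(2, 10))
normal = rng.normal(size=(500, 2)) @ mixing + 0.05 * rng.normal(size=(500, 10))

mu, comps = fit_pca(normal, k=2)
threshold = np.percentile(reconstruction_error(normal, mu, comps), 99)

# A reading that breaks the usual correlation between sensors
odd = normal[:1].copy()
odd[0, 3] += 5.0
print(reconstruction_error(odd, mu, comps)[0] > threshold)  # True
```

Swapping `fit_pca` and `reconstruction_error` for a trained autoencoder's encoder and decoder keeps the scoring and thresholding steps unchanged, which is what makes the approach easy to extend to nonlinear sensor relationships.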

Conclusion

Information-system-enabled manufacturing has increased the productivity and quality of industrial organizations of all shapes and sizes for decades now. In this smart manufacturing setting, the use of data analytics, statistical modelling, and predictive algorithms has grown significantly as the quality of machine-generated and human-generated data has improved over time.