Introduction:

A Quick Introduction to Neural Networks

A neural network is a machine learning model, and networks with many layers are the foundation of deep learning, a branch of artificial intelligence. Some application scenarios are too complex, or too far outside their scope, for conventional machine learning algorithms to handle. Neural nets, as they are commonly known, address such scenarios and fill the gap. This matters because applications need to cope with as many situations as possible: users stop using applications that keep crashing or need constant updates to run efficiently.

Artificial neural networks are inspired by the biological neurons in the human body, which activate in specific situations and trigger a corresponding action by the body. These artificial neural nets consist of several layers of interconnected artificial neurons, governed by activation functions that help switch them ON or OFF.

Like conventional machine learning algorithms, neural nets learn certain values (their parameters) during the training phase. Briefly, each neuron multiplies its inputs by weights (initialised randomly), adds a bias value [typically one per neuron], and passes the result to an appropriate activation function, which decides the final value the neuron outputs.
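The computation of a single neuron described above can be sketched in a few lines of Python. This is a minimal sketch that assumes a sigmoid activation; the function names and the example numbers are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Multiply inputs by weights, add the bias, then apply the activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Hypothetical example: three inputs, three weights and one bias.
example = neuron_output([1.0, 0.5, -1.0], [0.4, -0.2, 0.1], bias=0.05)
```

Passing the activation as a parameter makes it easy to swap in a different one, which reflects the point that the choice of activation function depends on the data.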

Different activation functions are available depending on the nature of the input values. Once the last layer of the network produces its output, a loss function [predicted output versus expected output] is computed and back propagation is performed, adjusting the weights to reduce the loss. Finding the optimal values of the weights is what the whole training process revolves around.
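The weight-adjustment loop can be illustrated on a single sigmoid neuron. Back propagation in a multi-layer network applies the same chain-rule gradient layer by layer. This sketch makes several assumptions: it uses binary cross-entropy as the loss and plain full-batch gradient descent, and the OR dataset, learning rate and epoch count are all hypothetical choices.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, w, b):
    return sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)


def bce_loss(data, w, b):
    """Mean binary cross-entropy between predicted and expected outputs."""
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for x, y in data:
        p = predict(x, w, b)
        total += -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
    return total / len(data)


def train(data, epochs=2000, lr=0.5, seed=0):
    rng = random.Random(seed)
    n = len(data[0][0])
    w = [rng.uniform(-1, 1) for _ in range(n)]  # random initial weights
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n
        grad_b = 0.0
        for x, y in data:
            # For sigmoid + cross-entropy, dLoss/dz is simply (prediction - target).
            err = predict(x, w, b) - y
            for i in range(n):
                grad_w[i] += err * x[i]
            grad_b += err
        # Gradient-descent step: move each parameter against its average gradient.
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
```

Calling `train(OR_DATA)` returns weights and a bias whose loss is lower than that of the all-zero starting point, which is the "finding optimal weights" step in miniature.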

How do Neural Networks work?

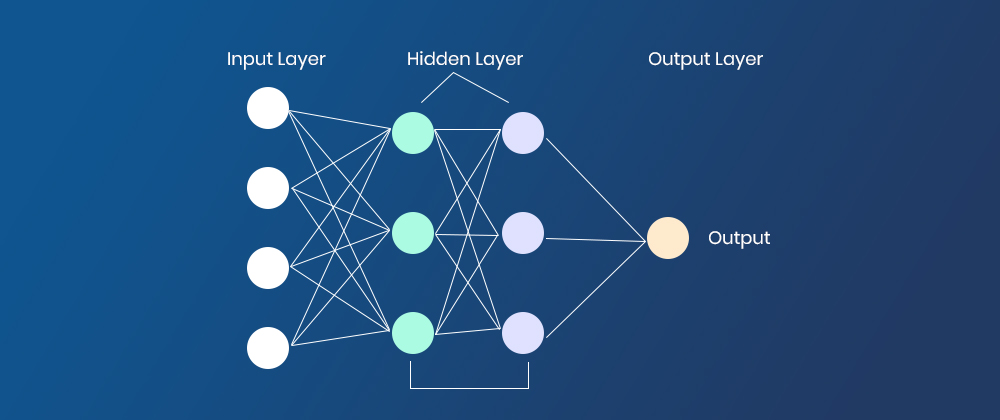

A neural network consists of a large number of processors. The processors are arranged in tiers, and those within a tier operate in parallel. The first tier receives the raw input, the information is processed through each tier in turn, and the last tier produces the final output. This is loosely similar to how the human brain processes raw information received through the optic nerve.

Each tier is made up of small nodes, and each node is highly interconnected with the nodes in the tiers before and after it. Each node in the neural network has its own sphere of knowledge, including the rules it was programmed with and the rules it has learnt by itself.

The key to the efficacy of neural networks is that they are extremely adaptive and learn quickly. Each node weighs the importance of the inputs it receives from the nodes before it, and the inputs that contribute most towards the right output are given the highest weights.

Now that we know what neural networks are and how they function, let us look at the basic types of networks.

There are many kinds of neural networks; here are five basic types:

1. Feed Forward Neural Network

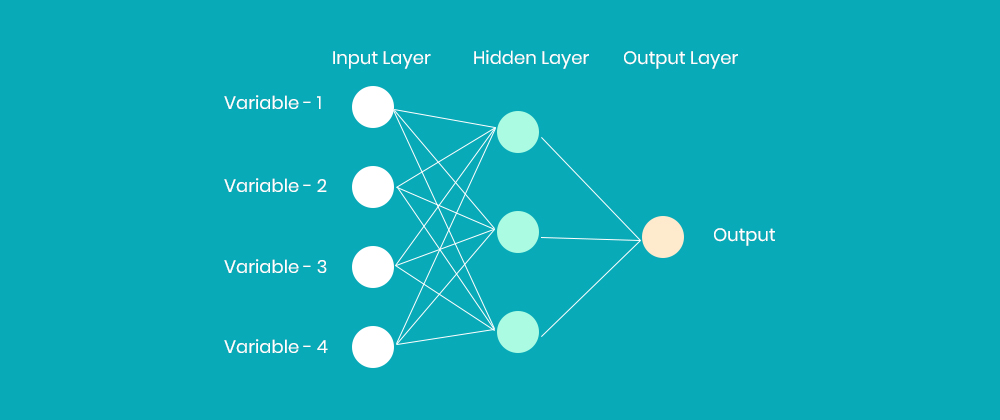

This is considered one of the simplest types of artificial neural network. In a feed forward neural network, data passes from the input nodes, through any hidden nodes, until it reaches the output nodes.

Applications of this network include computer vision, where the target classes are difficult to classify, and speech recognition, which requires identifying voices from patterns in a large amount of input data, making it hard to zero in on the exact voice.

Simple classification also uses feed forward networks, since traditional ML-based classification algorithms have various limitations. Face recognition involves simple image processing, so feed forward networks are used there as well.

When input data travels in only one direction, passing through artificial neural nodes and exiting through output nodes, the result is the simplest form of neural net. Input and output layers are always present, while hidden layers may or may not be.

These networks can be further classified as single-layered or multi-layered feed forward neural nets, depending on the number of layers the input data passes through. The number of layers depends on the complexity of the function to be performed. In the simple form described here, the network performs only forward propagation, with no backward propagation, which is a major drawback: the weights are static.

The inputs are multiplied by the weights and fed to the activation function. For this, a classifying (step) activation function is used. For example, the neuron is activated if its input is above the threshold (usually 0), in which case it outputs 1; it is not activated if its input is below the threshold, in which case it outputs -1. These networks are fairly simple to maintain and are equipped to deal with data that contains a lot of noise.
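To make the step activation and the one-way flow concrete, here is a minimal Python sketch covering both a single-layer and a multi-layer feed forward net. The weights are set by hand and never updated, matching the static-weight description above. The functions `step`, `layer_forward` and `network_forward` and all weight values are hypothetical, and treating an input exactly at the threshold as "not activated" is an assumption.

```python
def step(z, threshold=0.0):
    # Classifying (step) activation: output 1 above the threshold, otherwise -1.
    return 1 if z > threshold else -1


def layer_forward(inputs, weights, biases):
    # One layer: each row of `weights` holds the weights of one neuron.
    return [step(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]


def network_forward(inputs, layers):
    # Data moves strictly forward: each layer's output is the next layer's input.
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Single-layer net: one neuron with fixed weights.
single_layer = [([[0.5, 0.5]], [0.0])]

# Multi-layer net with hand-picked static weights that computes XOR.
xor_net = [
    ([[1, 1], [1, 1]], [-0.5, -1.5]),  # hidden layer: an OR-like and an AND-like neuron
    ([[1, -1]], [-0.5]),               # output neuron: "OR but not AND"
]
```

For example, `network_forward([0, 1], xor_net)` returns `[1]`. Because nothing adjusts the weights, a designer has to choose them, which is why this form cannot learn the way the networks in the later sections do.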

This network has plenty of advantages: it is less complex and easy to design and maintain, one-way propagation makes it fast, and it copes well with noisy data. The main disadvantage is that, due to the absence of dense layers and back propagation, it cannot be used for deep learning.

2. Radial Basis Function Network

A radial basis function considers the distance of any point relative to the centre. Such neural networks have two layers. In the inner layer, the features are combined with the radial basis function.

The outputs of these radial basis units are then combined, typically as a weighted sum, in the output layer to produce the network's final output. This network is straightforward to operate: the input is fed in and passes through the two layers to produce the output.
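A forward pass through such a two-layer network can be sketched as follows. It assumes a Gaussian radial basis function with a single shared width and a linear output layer; the centres, width and output weights are hypothetical values, and in practice they would be chosen or learned from data.

```python
import math


def gaussian_rbf(x, centre, width):
    # The response depends only on the distance between x and the centre.
    dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, centre))
    return math.exp(-dist_sq / (2 * width ** 2))


def rbf_network(x, centres, width, output_weights, output_bias=0.0):
    # Inner (hidden) layer: one radial basis unit per centre.
    hidden = [gaussian_rbf(x, c, width) for c in centres]
    # Output layer: a linear weighted sum of the hidden responses.
    return sum(w * h for w, h in zip(output_weights, hidden)) + output_bias


# Hypothetical two-centre network.
CENTRES = [[0.0, 0.0], [1.0, 1.0]]
WEIGHTS = [1.0, -1.0]
```

A point sitting on a centre gets the maximum response (1.0) from that unit, and the response fades smoothly with distance, which is what "considers the distance relative to the centre" means in practice.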

3. Multi-Layer Perceptron

The applications of this network include Speech Recognition, Machine Translation and Complex Classification.

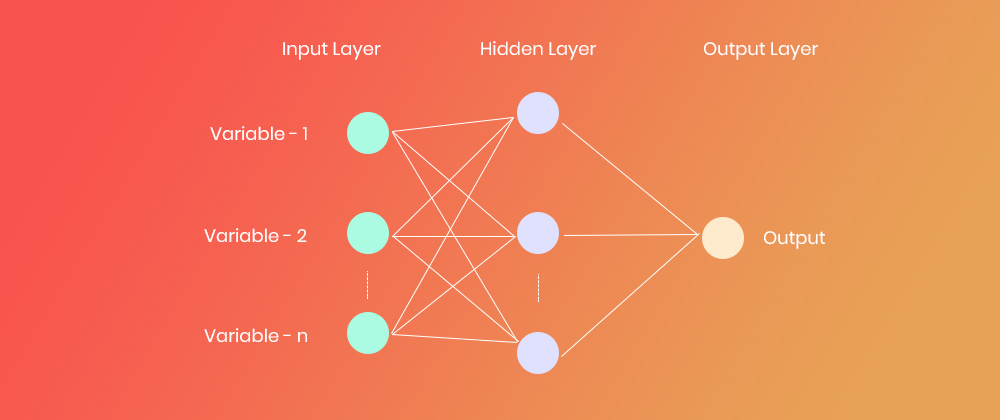

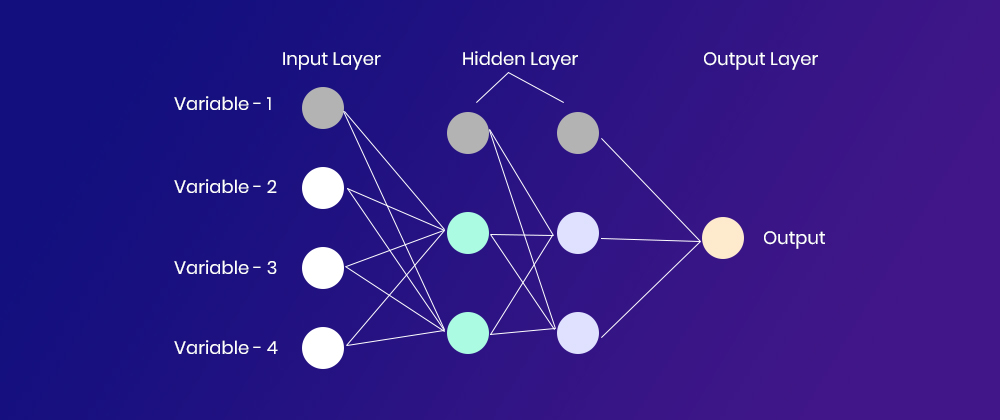

This network is an entry point into more complex neural nets, where input data travels through several layers of artificial neurons. Every node in one layer is connected to every neuron in the next layer, making it a fully connected neural network. There is an input layer, an output layer and one or more hidden layers, i.e. at least three layers in total.

The network uses bi-directional propagation, covering both forward and backward propagation. Inputs are multiplied by the weights and passed through the activation function, and backward propagation then updates the weights to reduce the loss.

The weights adjust themselves on the basis of the difference between the predicted outputs and the expected outputs in the training data; this is how the network learns its values. Non-linear activation functions are used in the hidden layers, followed by softmax as the output-layer activation function.
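The forward and backward passes of a small multi-layer perceptron can be sketched in plain Python. This is a minimal sketch, not a production implementation. It assumes one hidden layer with ReLU as the non-linear activation, a softmax output with cross-entropy loss, and per-example gradient descent. The layer sizes, seed and learning rate are hypothetical.

```python
import math
import random


def relu(z):
    return max(0.0, z)


def softmax(zs):
    # Subtract the max for numerical stability; the outputs sum to 1.
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


def init_mlp(n_in, n_hidden, n_out, seed=0):
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    W2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]
    return W1, [0.0] * n_hidden, W2, [0.0] * n_out


def forward(x, params):
    W1, b1, W2, b2 = params
    z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    h = [relu(z) for z in z1]  # hidden layer (fully connected)
    z2 = [sum(w * hi for w, hi in zip(row, h)) + b for row, b in zip(W2, b2)]
    return z1, h, softmax(z2)


def train_step(x, target, params, lr=0.1):
    """One forward + backward pass on one example; returns the loss before the update."""
    W1, b1, W2, b2 = params
    z1, h, probs = forward(x, params)
    loss = -math.log(probs[target])
    # Output error: gradient of cross-entropy w.r.t. the softmax inputs is probs - one_hot.
    d2 = [p - (1.0 if k == target else 0.0) for k, p in enumerate(probs)]
    # Hidden error: propagate d2 backwards through W2 (before updating it) and the ReLU.
    d1 = [sum(d2[k] * W2[k][j] for k in range(len(d2))) * (1.0 if z1[j] > 0 else 0.0)
          for j in range(len(h))]
    # Move every weight and bias against its gradient (lists are updated in place).
    for k in range(len(W2)):
        for j in range(len(h)):
            W2[k][j] -= lr * d2[k] * h[j]
        b2[k] -= lr * d2[k]
    for j in range(len(W1)):
        for i in range(len(x)):
            W1[j][i] -= lr * d1[j] * x[i]
        b1[j] -= lr * d1[j]
    return loss
```

Calling `train_step` repeatedly on the same example drives its loss down. This is the bi-directional propagation described above: `forward` computes the prediction, and the error terms `d2` and `d1` flow backwards to update the weights.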

The advantage of this network is that, due to its dense fully connected layers and back propagation, it can be used for deep learning. The disadvantages are that it is comparatively complex to design and maintain, and its processing is slow, depending on the number of hidden layers.

4. Modular Neural Network

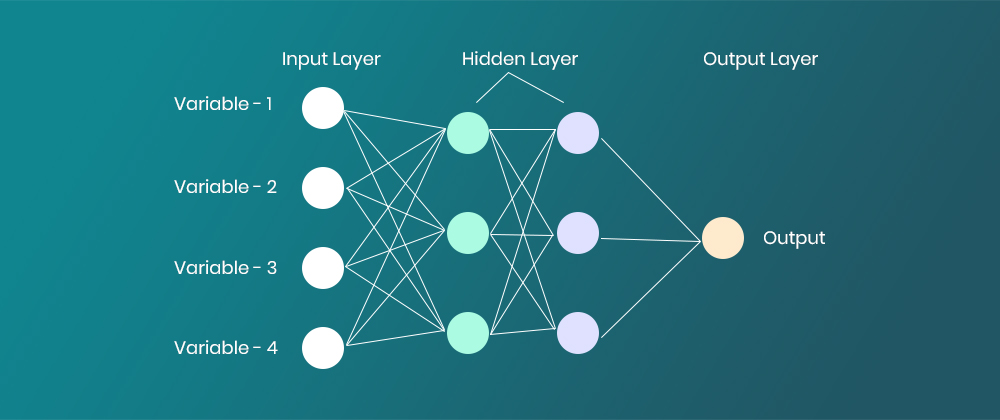

A modular neural network has a number of different networks that function independently and perform sub-tasks. The different networks do not really interact with or signal each other during the computation process. They work independently towards achieving the output.

As a result, a large and complex computational process can be completed significantly faster by breaking it down into independent components: because the networks neither interact with nor connect to each other, they can run in parallel. The figure above is a visual representation of a modular neural network.
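The sketch below shows the independence of the modules in code. Each module is a hypothetical single sigmoid neuron that sees only its own slice of the input. How the module outputs are merged is an assumption, since the text does not specify it, so the caller passes a `combine` function. The thread pool illustrates that modules can be evaluated concurrently. In CPython, pure-Python work in threads does not actually run faster because of the global interpreter lock, so a real speed-up would need processes or parallel hardware.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def make_module(weights, bias):
    """Return a tiny, independent sub-network (here: one sigmoid neuron)."""
    def module(inputs):
        z = sum(x * w for x, w in zip(inputs, weights)) + bias
        return 1.0 / (1.0 + math.exp(-z))
    return module


def modular_network(sub_inputs, modules, combine=sum):
    # Each module receives only its own input slice and never talks to the others,
    # so the modules can be evaluated concurrently in any order.
    with ThreadPoolExecutor() as pool:
        outputs = list(pool.map(lambda pair: pair[0](pair[1]), zip(modules, sub_inputs)))
    # pool.map preserves order, so outputs[i] belongs to modules[i].
    return combine(outputs)
```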

5. Convolutional Neural Network

A convolutional neural network (CNN) uses a variation of the multilayer perceptron. It contains one or more convolutional layers, which may be combined with fully connected or pooling layers.

A convolutional layer applies a convolution operation to its input before passing the output to the next layer of the network. Because the same small filter is reused across the whole input, the network can be much deeper while having far fewer parameters. Thanks to this ability, convolutional neural networks deliver very effective results in image and video recognition, natural language processing and recommender systems.
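A minimal sketch of the two core CNN operations is shown below: a "valid" 2-D convolution and non-overlapping max pooling. Like most deep learning libraries, it computes cross-correlation (the kernel is not flipped). The function names and the fixed 2x2 pooling window are illustrative assumptions, and real layers would also add a bias and an activation.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation): slide the kernel over the image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [[max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]
```

The parameter saving comes from reuse. A 3x3 kernel has 9 weights whatever the image size, whereas a fully connected layer mapping a 28x28 image (784 values) to a 26x26 output (676 values) would need 784 × 676 = 529,984 weights.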

Convolutional neural networks further show great results in semantic parsing and paraphrase detection. They are also applied in signal processing and image classification.

Every application requires a network that suits it, and how each network functions is also important to consider when choosing the most suitable one.

Conclusion

This was a brief overview of the types of neural networks and how they function differently. Each network has its own applications, and new neural networks will continue to be developed in the coming years to keep up with future technological advancements.

Keep reading this space for more information and updates on the topic.